San Francisco – September 3, 2025: OpenAI has announced new safety measures for ChatGPT, including routing sensitive conversations to reasoning models such as GPT-5-thinking and rolling out parental controls within the next month. The updates respond to rising concerns over AI safety, following recent tragedies and a wrongful death lawsuit against the company.

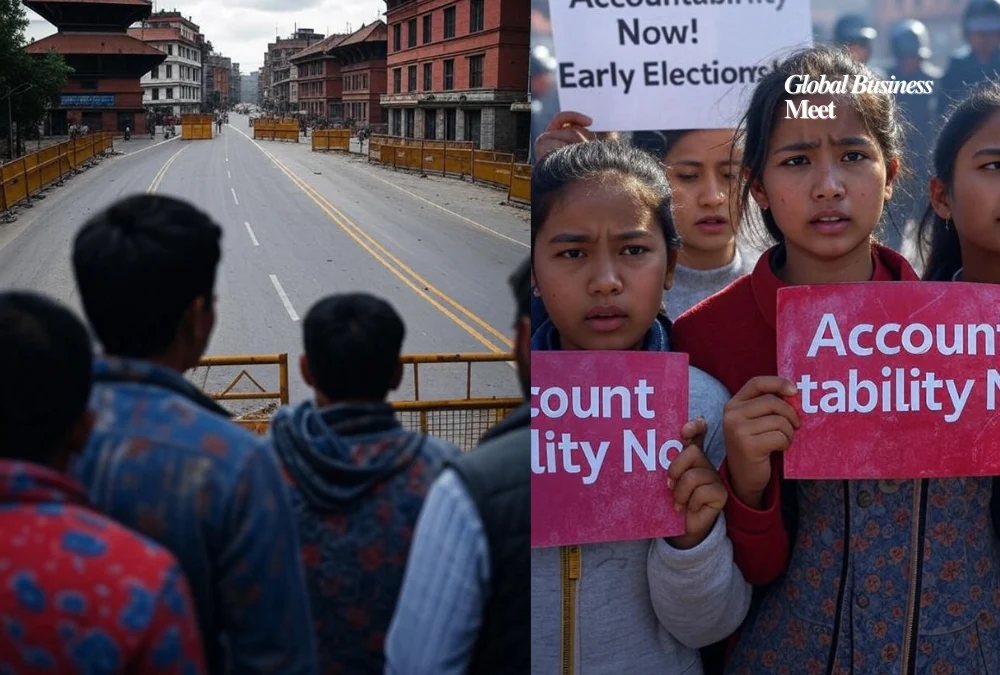

Tragedies Lead to Lawsuit Against OpenAI

The new safeguards follow the suicide of 16-year-old Adam Raine, who reportedly discussed self-harm and his plans to end his life with ChatGPT and received detailed information about suicide methods. Raine’s parents have since filed a wrongful death lawsuit against OpenAI, alleging that the chatbot failed to recognize signs of acute distress.

Another case, reported by The Wall Street Journal, involved Stein-Erik Soelberg, whose paranoid delusions were reinforced by his conversations with ChatGPT before he killed his mother and then himself. Experts say these incidents highlight a flaw in how current chatbots are designed: the models tend to validate user statements instead of redirecting harmful conversations.

Routing Sensitive Chats to GPT-5 Thinking

To address these failures, OpenAI said it has introduced a real-time router that chooses between its efficient chat models and its reasoning models based on the context of the conversation.

“We’ll soon begin to route some sensitive conversations, like when our system detects signs of acute distress, to a reasoning model, like GPT-5-thinking, so it can provide more helpful and beneficial responses,” the company explained in a blog post.

Unlike lighter chat models, reasoning models such as GPT-5-thinking and o3 are built to spend more time reasoning through context before answering, which, according to OpenAI, makes them more resistant to adversarial prompts and better suited to high-risk conversations.

Parental Controls and Keeping Teens Safe

OpenAI also plans to introduce parental controls within the next month. Parents will be able to link their accounts to their teens’ accounts and enforce “age-appropriate model behavior rules,” which will be on by default.

Key parental features include:

- The option to disable memory and chat history, reducing the risk of dependency and the reinforcement of harmful thought patterns.

- Alerts to parents when the system detects that their teen is in acute distress.

- The ability to control how ChatGPT responds and to limit sensitive interactions.

These safeguards build on Study Mode, launched earlier this year, which encourages students to use ChatGPT as a learning assistant rather than a shortcut for schoolwork.

Expert Partnerships and Criticism

OpenAI says these moves are part of a 120-day safety initiative, supported by its Global Physician Network and its Expert Council on Well-Being and AI, whose members include specialists in adolescent health, eating disorders, and substance use.

Despite this, critics argue the company is moving too slowly. Jay Edelson, lead counsel for the Raine family, stated:

“OpenAI doesn’t need an expert panel to determine that ChatGPT 4o is dangerous. They knew that the day they launched the product, and they know it today.”

The Bigger Picture on AI Safety

Because chatbots generate responses by predicting the next word, researchers have long warned that they tend to validate and extend user statements rather than challenge or redirect them. This becomes especially dangerous when conversations revolve around mental illness, paranoia, or self-harm.

Although OpenAI has added in-app reminders encouraging breaks during long sessions, experts argue stronger interventions may be necessary.

As AI adoption accelerates worldwide, OpenAI’s updates (routing sensitive conversations to reasoning models, introducing parental controls, and working with health experts) illustrate the company’s attempt to balance accessibility and safety. If they prove effective, the changes could set a new benchmark for responsible AI design and safeguards for adolescents.