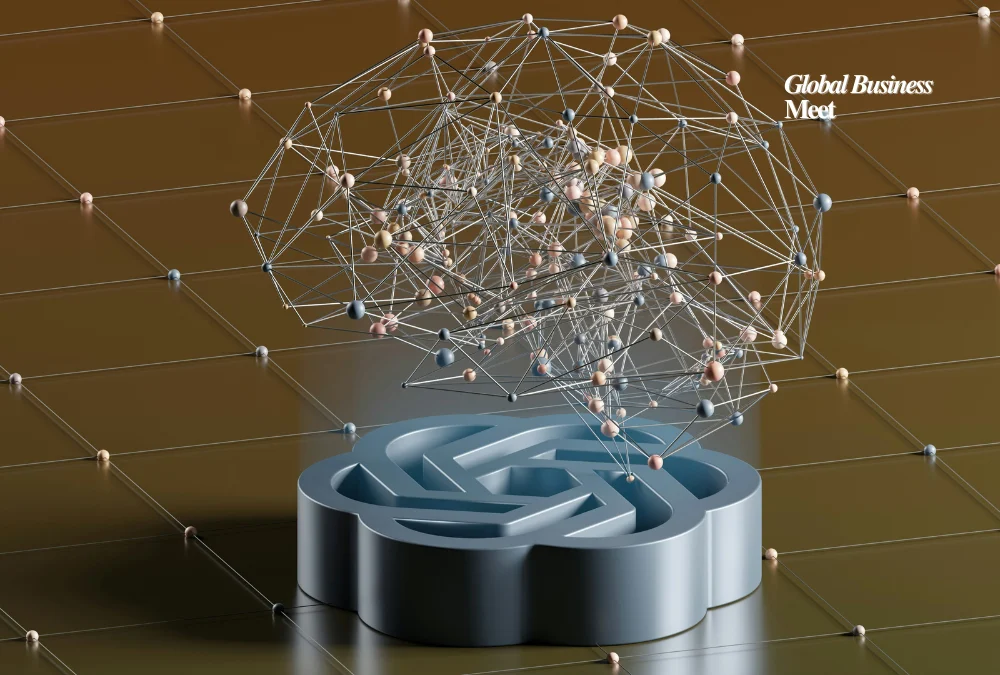

Cloud-native infrastructure and open-source tooling are becoming critical to the emergence of agentic AI: AI systems that can autonomously execute multi-step tasks. Together, these approaches pair scalable architectures with collaborative development, allowing AI workflows to adapt, execute, and iterate without human intervention.

The cloud-native model is at the core of modern agentic AI, offering elastic compute resources, resilient container orchestration (such as Kubernetes), and serverless functions that scale dynamically with demand. These components let AI agents spawn new sub-agents on the fly, such as a task planner, an execution module, or a monitoring component, and coordinate them in parallel without manual setup. This elasticity not only speeds up development but also enables workflows that can handle unpredictable workloads and real-world complexity.
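The dynamic fan-out described above can be sketched in plain Python with `asyncio`. This is a minimal, single-process illustration under stated assumptions: `SubAgent`, `fan_out`, and the role names are hypothetical, and each coroutine stands in for what would, in a real cloud-native deployment, be a separate container or serverless invocation scheduled by the platform.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class SubAgent:
    """Hypothetical sub-agent; `role` stands in for planner, executor, monitor, etc."""
    role: str

    async def run(self, task: str) -> str:
        # Placeholder for real work such as a model call or tool invocation.
        await asyncio.sleep(0.01)
        return f"{self.role} finished: {task}"


async def fan_out(task: str, roles: list[str]) -> list[str]:
    # Create one sub-agent per role at runtime and run them all concurrently.
    agents = [SubAgent(role) for role in roles]
    return await asyncio.gather(*(agent.run(task) for agent in agents))


if __name__ == "__main__":
    results = asyncio.run(fan_out("summarize Q3 report", ["planner", "executor", "monitor"]))
    for line in results:
        print(line)
```

`asyncio.gather` runs the sub-agents concurrently and returns their results in the order the agents were created, so the caller can match each result to its role.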

Open-source frameworks complement this foundation by giving developers customizable building blocks. Platforms such as LangChain, LlamaIndex, and Ray are increasingly popular for organizing AI pipelines: chaining prompt-based agents, managing memory and context, distributing work, and handling failures. Because their source is public and modifiable, teams can adapt them, contribute improvements upstream, and avoid vendor lock-in, which accelerates community-driven progress.
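To make the chaining, memory, and failure-handling ideas concrete, here is a framework-agnostic sketch. It is not the API of LangChain, LlamaIndex, or Ray: `run_chain`, `retrieve`, and `FlakySummarizer` are hypothetical names, and the steps are plain functions standing in for model or tool calls. Each step receives the previous output plus a shared memory list, and transient `RuntimeError`s are retried a bounded number of times.

```python
from typing import Callable

# A step takes the previous output and the shared memory, and returns new output.
Step = Callable[[str, list[str]], str]


def run_chain(steps: list[Step], user_input: str, max_retries: int = 2) -> tuple[str, list[str]]:
    """Run steps in order, feeding each output to the next and retrying failures."""
    memory: list[str] = []  # shared context; steps may read earlier outputs from it
    value = user_input
    for step in steps:
        for attempt in range(max_retries + 1):
            try:
                value = step(value, memory)
                break
            except RuntimeError:
                if attempt == max_retries:
                    raise  # give up after the final retry
        memory.append(value)
    return value, memory


def retrieve(text: str, memory: list[str]) -> str:
    # Hypothetical retrieval step; a real one might query a vector store.
    return f"docs about {text}"


class FlakySummarizer:
    """Hypothetical step that fails on its first call to simulate a transient error."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, text: str, memory: list[str]) -> str:
        self.calls += 1
        if self.calls == 1:
            raise RuntimeError("transient model error")
        return f"summary of {text}"


final, history = run_chain([retrieve, FlakySummarizer()], "Ray")
print(final)  # summary of docs about Ray
```

Bounding retries keeps a persistently failing step from stalling the pipeline indefinitely: after `max_retries` extra attempts the error propagates to the caller, which can then reroute or escalate.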

One emerging pattern is the micro-agent architecture, in which each agent is responsible for a specific task, such as data retrieval, summarization, environment interaction, or integration with third-party tools. This modularity resembles microservices in conventional backends, but with an AI layer on top. When the APIs are well defined, a central dispatcher can orchestrate the micro-agents, enabling collaborative, dynamic problem-solving at scale.
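A central dispatcher over micro-agents can be sketched as a registry keyed by task type. The `Dispatcher` class and the lambda agents below are hypothetical placeholders; in practice each agent would sit behind a well-defined API (for example an HTTP or RPC endpoint), but the routing idea is the same.

```python
from typing import Callable, Dict

# Hypothetical common interface: every micro-agent maps a string payload to a string result.
Agent = Callable[[str], str]


class Dispatcher:
    """Routes each task to the micro-agent registered for its task type."""

    def __init__(self) -> None:
        self._agents: Dict[str, Agent] = {}

    def register(self, task_type: str, agent: Agent) -> None:
        self._agents[task_type] = agent

    def dispatch(self, task_type: str, payload: str) -> str:
        agent = self._agents.get(task_type)
        if agent is None:
            raise KeyError(f"no micro-agent registered for {task_type!r}")
        return agent(payload)


dispatcher = Dispatcher()
# Placeholder agents; real ones would call a retriever or a language model.
dispatcher.register("retrieve", lambda q: f"3 documents matching {q!r}")
dispatcher.register("summarize", lambda text: text[:20] + "...")

print(dispatcher.dispatch("retrieve", "protein folding"))
```

Because the agents share only a `str -> str` calling convention, new capabilities can be registered without changing the dispatcher, much as microservices are added behind a gateway.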

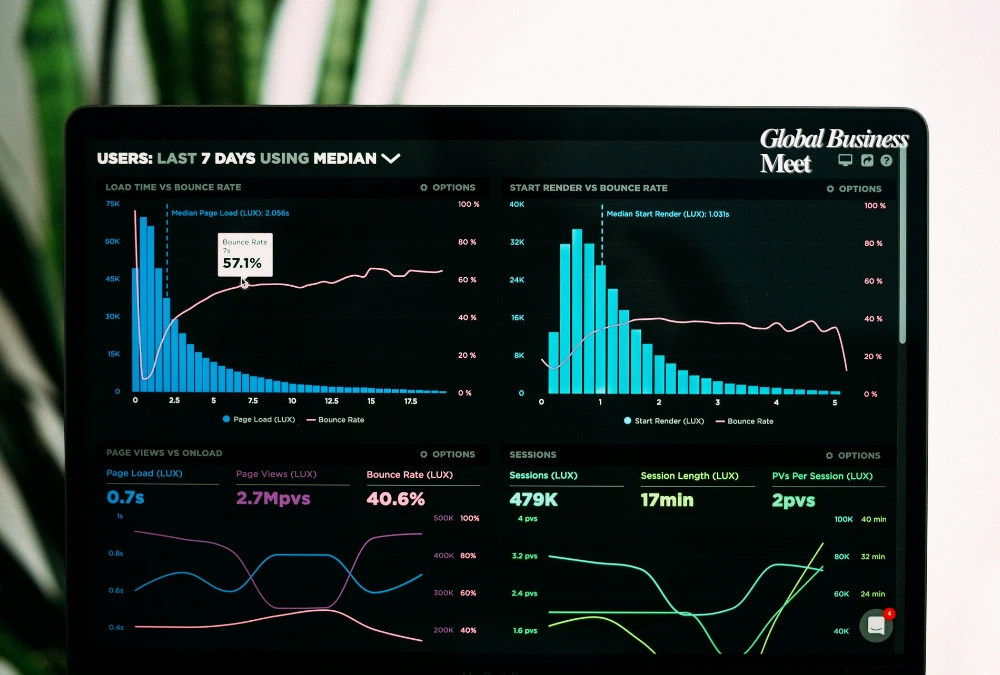

Beyond orchestration, observability and reliability are essential. Agentic stacks incorporate open-source instrumentation such as OpenTelemetry and Prometheus to monitor task execution, latency, and error rates. As these metrics flow back into the system, it can retrain, reroute, or redeploy components when something breaks, yielding workflows that remain resilient to unexpected inputs or service disruptions.
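The feedback loop from metrics to rerouting can be illustrated with a small in-process health check. This is a hypothetical sketch: `HealthTracker` and `route` are illustrative names, and the in-memory sliding window stands in for error-rate metrics that a real stack would export through OpenTelemetry or Prometheus and evaluate externally. When the primary agent's recent error rate exceeds a threshold, requests go to a fallback agent.

```python
from collections import deque
from typing import Callable


class HealthTracker:
    """Hypothetical tracker of recent success/failure outcomes for one agent."""

    def __init__(self, window: int = 20, max_error_rate: float = 0.5) -> None:
        # Only the most recent `window` outcomes are kept (True = success).
        self.outcomes: deque = deque(maxlen=window)
        self.max_error_rate = max_error_rate

    def record(self, ok: bool) -> None:
        self.outcomes.append(ok)

    def error_rate(self) -> float:
        if not self.outcomes:
            return 0.0
        return self.outcomes.count(False) / len(self.outcomes)

    def healthy(self) -> bool:
        return self.error_rate() <= self.max_error_rate


def route(
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
    tracker: HealthTracker,
    payload: str,
) -> str:
    """Send the payload to `primary` while it looks healthy, otherwise to `fallback`."""
    if tracker.healthy():
        try:
            result = primary(payload)
            tracker.record(True)
            return result
        except Exception:
            # Any failure counts against the primary; the request is then rerouted.
            tracker.record(False)
    return fallback(payload)
```

As written, an unhealthy primary is never tried again, because no new outcomes are recorded while it is bypassed. A production version would typically add a cooldown after which a single trial request is let through, as in the circuit-breaker pattern.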

Applications span many industries: fintech (agent workflows that validate transactions against SAP systems), customer service (bots that draw on documentation to respond in context), and bioinformatics (pipelines that curate and synthesize protein research across many databases).

To sum up, the convergence of cloud-native infrastructure and open-source agent frameworks is driving the movement toward genuinely autonomous AI systems. Containers and serverless platforms, developer tooling, and micro-agent composition all give developers more flexibility, while open-source transparency drives innovation and builds trust in the community. As agentic AI matures, these technologies will enable scalable, reliable, collaborative AI ecosystems that can orchestrate high-level tasks without direct human involvement.