OpenAI has agreed to a historic contract to pay Oracle up to approximately $30 billion annually for data center services, a move that marks a significant change in the company's cloud infrastructure strategy. The deal reflects both OpenAI's enormous demand for compute to power the next generation of AI models and Oracle's growing position as a high-performance cloud provider.

Under the multi-year agreement, Oracle will build and operate dedicated hardware clusters with high-density GPU configurations tailored to AI-scale workloads. The scope covers infrastructure provisioning, data management, high-speed networking, and energy-optimized cooling. OpenAI expects the arrangement to substantially increase the resources available to train and deploy models such as GPT-4.5, GPT-5, and their successors.

High-performance infrastructure has become a core business requirement for AI leaders, and OpenAI's choice of Oracle reflects Oracle's ambition to compete with larger rivals such as AWS, Azure, and Google Cloud. Long characterized as an enterprise software giant, Oracle has been building out its own high-end cloud portfolio to attract and retain AI-first businesses, and this deal demonstrates that strategy.

For OpenAI, relying on a single cloud vendor has become a point of weakness as its compute demands grow. Moving workloads onto Oracle's infrastructure not only adds redundancy but also gives OpenAI greater visibility into costs and potentially more leverage to negotiate them as AI compute prices rise.

For its part, Oracle gains the prestige of hosting one of the world's most high-profile AI companies. The deal is expected to give OpenAI access to Oracle's AI-specialized service tiers, managed Kubernetes platforms, and advanced security options, providing a predictable, optimized environment in which to run its models.

Industry experts note that a contract worth up to $30 billion a year with a single cloud vendor is almost unheard of. It also signals that AI innovators are making long-term infrastructure commitments as GPU demand surges. As OpenAI scales toward training trillion-parameter models, optimizing performance and cost efficiency becomes not merely useful but essential.

Nevertheless, a contract of this size carries risks. OpenAI must ensure that Oracle meets SLAs for uptime, security, and bottleneck-free performance. Oracle, in turn, needs to deliver regular improvements to stay competitive with its cloud-native peers. Any weakness in infrastructure delivery would directly affect OpenAI's product development schedule and competitive advantage.

The partnership is also likely to intensify the cloud wars. AWS, Azure, and Google Cloud have already begun responding with AI-optimized hardware, custom chips, and packaged service offerings. Oracle's ability to win business away from well-established providers is shifting the competitive balance for both enterprises and AI-focused startups.

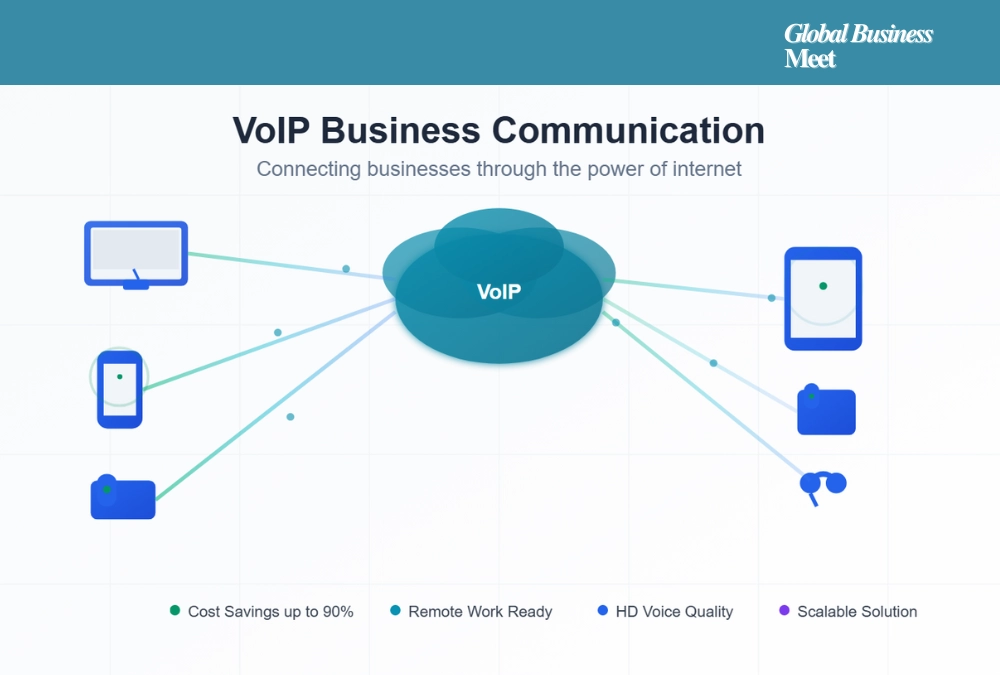

Beyond compute, the deal reflects a broader trend: a shift toward infrastructure strategies built specifically around AI. Organizations are moving away from general-purpose cloud platforms toward purpose-built environments designed for AI research, training, and inference. The OpenAI-Oracle partnership could encourage similar alliances in other industries driven by large-scale machine learning.

The agreement may also affect procurement and economics. Buying compute at a scale of tens of billions of dollars per year strengthens OpenAI's bargaining power, allowing it to negotiate for specialized hardware, capacity reservations, and joint R&D with Oracle's engineering team. Such arrangements could lead to co-development projects that accelerate AI hardware development.

Nonetheless, the deal raises concerns about lock-in, flexibility, and pricing transparency. Long-term partnerships provide stability, but they can also reduce agility and increase dependency. OpenAI will need to keep its expansion flexible, building multi-cloud agility alongside contracted scalability.

In conclusion, OpenAI's move to commit up to $30 billion per year to Oracle's data center services is a landmark decision in its AI infrastructure strategy. It showcases Oracle's cloud ambitions, underscores the high-stakes economics of GPU compute, and signals a new generation of cloud partnerships designed to accelerate AI. The collaboration could reshape how both the cloud and AI industries approach infrastructure, competition, and innovation as AI grows in scale.