The Ideology Behind the AI Empire

Every empire is fueled by an ideology that justifies its expansion, even when that expansion contradicts the very ideals it claims to uphold. For European colonial powers, it was Christianity and the promise of saving souls while exploiting resources. For today’s artificial intelligence industry, the driving ideology is artificial general intelligence (AGI), presented as a vision that will “benefit all humanity.” OpenAI has become the most visible evangelist of this belief, reshaping the way the industry builds AI.

Journalist and bestselling author Karen Hao explored this in her book Empire of AI. Speaking on TechCrunch’s Equity podcast, Hao recalled interviewing researchers whose voices trembled with conviction about AGI’s inevitability.

OpenAI’s Global Power

Hao describes the AI sector, particularly OpenAI, as an empire. In her words, “The only way to really understand the scope and scale of OpenAI’s behavior is to recognize that they’ve already grown more powerful than almost any nation-state in the world.” She argues that OpenAI has consolidated enormous economic and political power, shaping geopolitics and daily life in ways that rival governments.

OpenAI has defined AGI as “a highly autonomous system that outperforms humans at most economically valuable work.” The company promises that AGI will elevate humanity by creating abundance, boosting economies, and driving scientific discovery. Yet these promises remain vague, and they have been used to justify an industry that consumes vast resources, from oceans of scraped data to enormous amounts of energy, while releasing systems that often prove risky or incomplete.

Choosing Speed Over Safety

Hao believes this trajectory was not inevitable. Advances could have come through improving algorithms to reduce data and compute requirements. But OpenAI chose speed as its priority. “When you define the quest to build beneficial AGI as one where the victor takes all, then the most important thing is speed over anything else,” she said. This focus pushed efficiency, safety, and exploratory research aside.

OpenAI pursued scale by feeding existing techniques ever more data and ever larger supercomputers, a strategy Hao calls “the intellectually cheap thing.” Other companies soon followed to avoid falling behind. Because most top AI researchers now work in industry instead of academia, the discipline itself is increasingly shaped by corporate priorities rather than by independent scientific exploration.

The Massive Cost of the AI Race

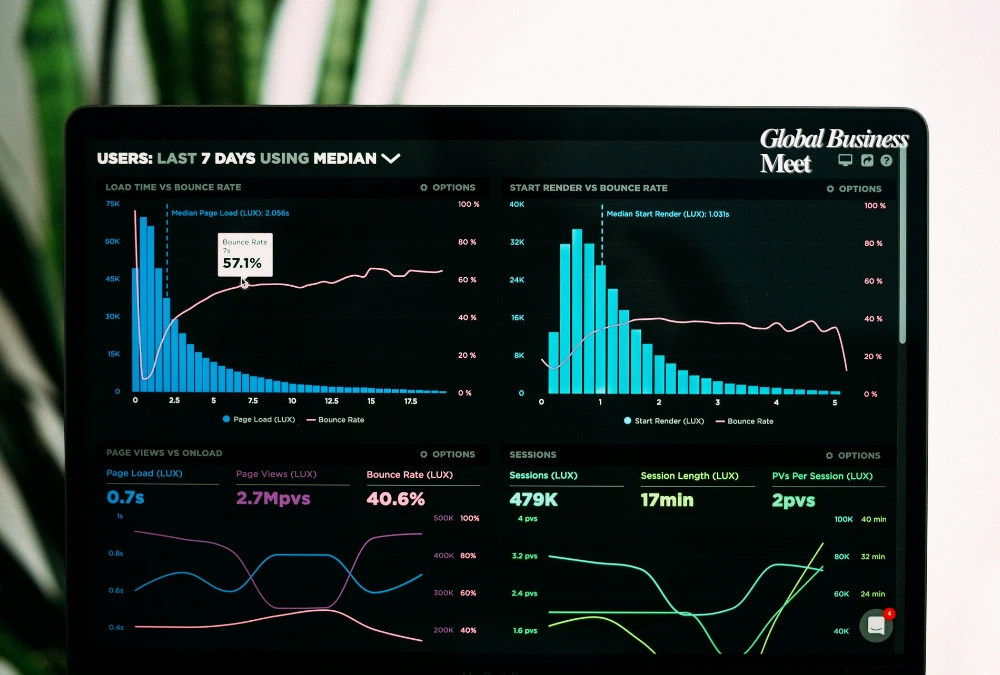

The financial commitment to this race is staggering. OpenAI recently projected it could spend $115 billion by 2029. Meta expects up to $72 billion in AI infrastructure investment this year, while Google anticipates up to $85 billion in capital expenditures for 2025, largely dedicated to AI and cloud expansion.

Despite this spending, many of the promised “benefits to humanity” remain elusive, while harms such as job displacement, concentrated wealth, and mental health crises linked to AI chatbots are already visible. Hao also highlights the hidden human toll: workers in countries like Kenya and Venezuela are paid as little as $1 to $2 an hour to filter disturbing material, including child sexual abuse content, for content moderation and data labeling.

Examples of Responsible AI

Hao emphasizes that not all AI follows this harmful path. Google DeepMind’s AlphaFold, for instance, accurately predicts a protein’s three-dimensional structure from its amino acid sequence. It has transformed drug discovery and disease research while avoiding the environmental, psychological, and ethical costs tied to large language models.

“AlphaFold does not create mental health crises in people,” Hao said. “It does not require colossal infrastructure or rely on toxic internet datasets. It shows there are alternative ways to build impactful AI.”

Geopolitics and the Race Narrative

A common narrative driving AI’s rapid development has been the race against China, with Silicon Valley positioned as the force that would liberalize global politics. Hao argues the opposite has happened: the U.S.–China gap has narrowed, and Silicon Valley has had an illiberalizing effect on the world. The biggest beneficiary of this framing has been the AI industry itself.

OpenAI’s Mission in Conflict

OpenAI’s unusual structure, a nonprofit that controls a for-profit company, complicates how it measures its impact. Recent news of a deeper partnership with Microsoft, which could pave the way for OpenAI to go public, has blurred these lines even further.

Two former OpenAI safety researchers told TechCrunch they fear the organization now confuses its nonprofit mission with its profit-driven goals. Because millions of people enjoy using ChatGPT, the company treats that popularity as proof that it is benefiting humanity, even while harms remain unaddressed.

The Danger of Blind Belief

Hao warns of the risks of being consumed by an ideology to the point of ignoring reality. “Even as evidence accumulates that what they’re building is harming people, the mission continues to paper over those harms,” she said. To her, this blind commitment to AGI reflects not only ambition but also a dangerous loss of perspective.