OpenAI and Amazon Web Services (AWS) have struck a significant deal that makes the company's AI models, including its GPT-4o Turbo and GPT-4o Vision models, available directly on AWS infrastructure. The strategic move is a first step toward official deployment of OpenAI models through a third-party cloud provider. It gives developers and businesses more flexibility and scalability as they integrate state-of-the-art AI into their applications.

Wider Reach Using AWS

Previously, OpenAI models were available only through the OpenAI API and Microsoft Azure, where OpenAI has preferred integrations. Now the models can be deployed in a runtime on AWS, so users can run inference on AWS infrastructure instead of making centralized API calls. This matters wherever latency, data sovereignty, or data residency is important. The partnership also provides fully managed model hosting, which simplifies deployment and builds in compliance and security integration.

This is particularly useful for enterprises already on AWS that prefer to keep costs and data centralized.

It allows low-latency inference close to data sources.

It supports tight integration with AWS services such as SageMaker, Lambda, and cloud storage.
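As a rough illustration of the SageMaker integration, the following Python sketch sends a prompt to a model served behind a SageMaker real-time endpoint using boto3's `sagemaker-runtime` client and its `invoke_endpoint` call. It assumes the model has already been deployed to an endpoint. The endpoint name `my-openai-endpoint` is hypothetical, and the chat-style JSON request schema is an assumption, since the real format depends on the serving container. The client is passed in as a parameter so the request logic can be exercised without AWS credentials.

```python
import json
from typing import Any, Protocol


class SageMakerRuntime(Protocol):
    """The subset of the boto3 'sagemaker-runtime' client used here."""

    def invoke_endpoint(self, **kwargs: Any) -> dict: ...


def build_payload(prompt: str, max_tokens: int = 256) -> bytes:
    # ASSUMPTION: a chat-style JSON schema. The actual schema depends on
    # the serving container deployed behind the endpoint.
    body = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return json.dumps(body).encode("utf-8")


def invoke(client: SageMakerRuntime, endpoint_name: str, prompt: str) -> dict:
    """Send one prompt to a SageMaker real-time endpoint and decode the JSON reply."""
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=build_payload(prompt),
    )
    # The runtime returns the model output as a streaming body under "Body".
    return json.loads(response["Body"].read())


if __name__ == "__main__":
    import boto3  # requires AWS credentials and an already deployed endpoint

    runtime = boto3.client("sagemaker-runtime", region_name="us-east-1")
    # "my-openai-endpoint" is a hypothetical endpoint name.
    print(invoke(runtime, "my-openai-endpoint", "Summarize this contract clause."))
```

Injecting the client keeps the request-building logic testable with a stub, while the same function works unchanged with the real boto3 client in production.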

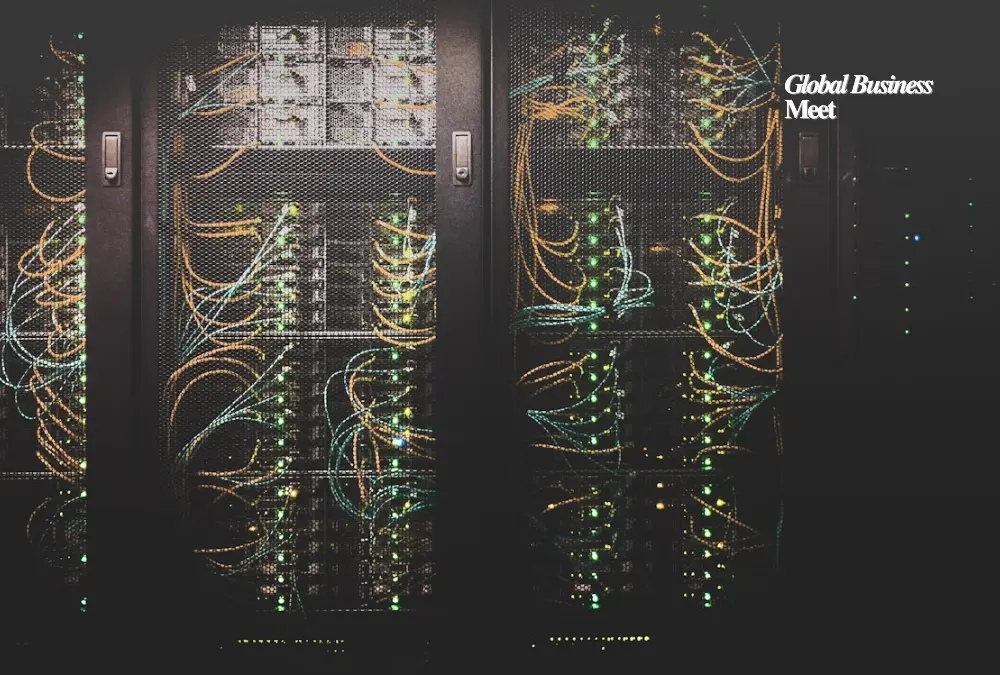

Architecture: Models That Run in Your Cloud

Inference for GPT-4o Vision and GPT-4o Turbo workloads will run on AWS hardware built on NVIDIA H100/H200 GPUs and optimized together with OpenAI.

Key details:

First-party hosting: the models are hosted and configured by Amazon to ensure performance and reliability.

Security isolation: workloads run in encrypted, compliant Virtual Private Clouds (VPCs).

Automatic scaling and updates: models are updated to keep pace with OpenAI's releases.

This configuration lets customers run AI workloads inside their own AWS environment without routing large data flows to external services. That makes it well suited to settings where industry regulations apply and privacy is an organizational priority.

Why AWS Matters for OpenAI Adoption

The move strengthens OpenAI's enterprise adoption by providing:

Access to AWS's large enterprise customer base, including the fintech, government, and healthcare industries.

Hybrid and multi-cloud AI workflows that let developers choose between calling the API and deploying locally.

A competitive answer to existing hosted-model offerings on AWS, such as Amazon Bedrock and Anthropic's Claude.
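A hybrid workflow implies a per-request routing decision. The Python sketch below shows one possible policy: regulated data always stays on the AWS-hosted deployment, tight latency budgets also favor local inference, and everything else goes to the OpenAI API. The `Request` fields, the `Target` names, and the 300 ms threshold are hypothetical placeholders, not values published by either company.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    AWS_HOSTED = "aws_hosted"  # model deployed inside the customer's AWS environment
    OPENAI_API = "openai_api"  # centralized call to the OpenAI API


@dataclass(frozen=True)
class Request:
    contains_regulated_data: bool  # e.g. financial, legal, or health records
    latency_budget_ms: int         # end-to-end latency budget for the call


# HYPOTHETICAL threshold: an assumed API round trip, not a measured figure.
API_ROUND_TRIP_MS = 300


def choose_target(req: Request) -> Target:
    # Data residency is treated as a hard constraint: regulated data stays in AWS.
    if req.contains_regulated_data:
        return Target.AWS_HOSTED
    # Budgets tighter than the assumed API round trip favor inference near the data.
    if req.latency_budget_ms < API_ROUND_TRIP_MS:
        return Target.AWS_HOSTED
    # No constraint forces local hosting, so use the pay-per-use API.
    return Target.OPENAI_API
```

Keeping the policy in one pure function makes it easy to audit, which matters when compliance teams need to verify where regulated data can flow.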

In effect, OpenAI gains wider reach, while AWS strengthens its AI capabilities and positions itself as an end-to-end destination for generative AI workloads.

Use Cases: What This Enables

Real-time customer support bots that run locally to reduce latency.

Document processing in regulated sectors (e.g., finance or legal), where sensitive information must not leave AWS-controlled environments.

AI-powered features, such as vision-based analysis and intelligent code assistants, that run alongside workflows on SageMaker or Lambda.

Scaled inference pipelines on AWS that keep latency-sensitive tasks close to production data stores.

Challenges and Considerations

Despite its merits, this approach has caveats:

Cost: Hosting large models on AWS is expensive because GPU instances are billed by the hour.

API parity: Some newer features or refinements may appear first on the OpenAI API or Azure.

Vendor lock-in risk: Enterprises need to plan for model version control across multiple clouds.

Compliance complexity: Organizations must manage data residency, audit logging, and integration under shared responsibility models.
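To make the cost caveat concrete, the Python sketch below compares always-on GPU hosting, billed per instance-hour, with pay-per-token API pricing. It then computes the monthly token volume at which the two cost the same. The hourly rate, instance count, and per-million-token price in the example are placeholders, not actual AWS or OpenAI prices, so substitute current figures before drawing conclusions.

```python
# About 730 hours in an average month (8,760 hours per year / 12).
HOURS_PER_MONTH = 730.0


def monthly_hosting_cost(hourly_rate: float, instances: int, hours: float = HOURS_PER_MONTH) -> float:
    """Always-on hosting: every instance-hour is billed, busy or idle."""
    return hourly_rate * instances * hours


def monthly_api_cost(tokens: float, price_per_million: float) -> float:
    """Pay-per-use API: cost grows linearly with the tokens processed."""
    return tokens / 1_000_000 * price_per_million


def break_even_tokens(
    hourly_rate: float, instances: int, price_per_million: float, hours: float = HOURS_PER_MONTH
) -> float:
    """Monthly token volume at which hosting and API costs are equal."""
    return monthly_hosting_cost(hourly_rate, instances, hours) / price_per_million * 1_000_000


if __name__ == "__main__":
    # PLACEHOLDER inputs for illustration only; not real AWS or OpenAI prices.
    rate, count, per_million = 40.0, 2, 10.0
    print(f"Hosting cost per month: ${monthly_hosting_cost(rate, count):,.0f}")
    print(f"Break-even volume: {break_even_tokens(rate, count, per_million):,.0f} tokens/month")
```

Below the break-even volume, pay-per-use API access is cheaper. Above it, dedicated hosting starts to pay off, before accounting for engineering and compliance overhead.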

Strategic Implications for OpenAI and AWS

OpenAI strengthens its business focus by offering new deployment options for regulated and latency-sensitive workloads.

By offering high-end models alongside its own AI offerings, AWS becomes even more credible as a complete AI cloud vendor.

The agreement sets a precedent: other cloud providers may negotiate similar licensing partnerships, which could speed up AI adoption across countries.

For developers and CIOs, this new deployment option offers more flexibility in building intelligent, compliant systems without switching cloud providers.