The FAIR lab at Meta has released five new projects focused on developing artificial intelligence with human-like capabilities. The projects cover perception, language learning, spatial understanding, and behavior, moving from specialized models toward general-purpose AI systems.

1. Perception Encoder: Human-Like Artificial Intelligence Vision

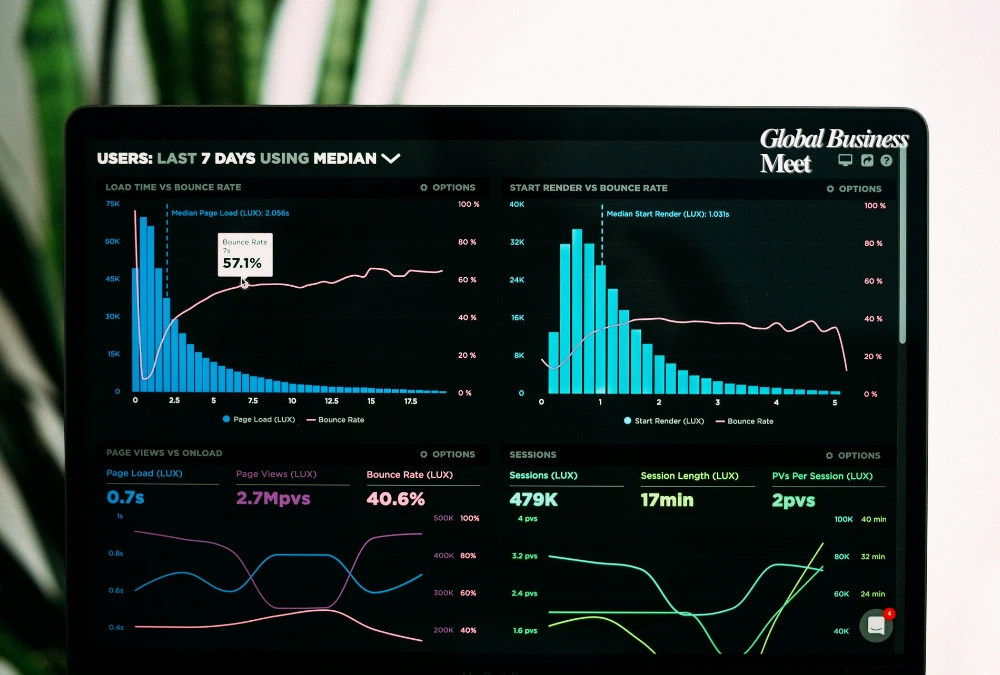

Perception Encoder is a vision model designed to process visual data with human-level sensitivity. It outperforms existing models on both zero-shot retrieval and zero-shot classification, meaning it can recognize objects it was never explicitly trained to identify. It also does better than contemporary systems at spotting camouflaged animals and hard-to-see objects hidden in crowded scenes.
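To make "zero-shot classification" concrete, here is a minimal sketch of the general technique, assuming a contrastive image-text encoder that maps images and text prompts into a shared embedding space. This is not Perception Encoder's actual API: the function names, the labels, and the three-dimensional toy embeddings are all hypothetical stand-ins for what a real encoder would produce.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def zero_shot_classify(image_embedding, label_embeddings):
    """Pick the label whose text embedding is closest to the image embedding.

    No classifier is trained on these labels; the labels are only compared
    to the image in the shared embedding space.
    """
    scores = {
        label: cosine(image_embedding, emb)
        for label, emb in label_embeddings.items()
    }
    best = max(scores, key=scores.get)
    return best, scores

# Hypothetical toy embeddings; a real encoder would output vectors with
# hundreds of dimensions for each text prompt and each image.
label_embeddings = {
    "a photo of an owl": [0.9, 0.1, 0.0],
    "a photo of tree bark": [0.1, 0.9, 0.1],
    "a photo of a cat": [0.0, 0.2, 0.9],
}
image_embedding = [0.8, 0.2, 0.1]  # assumed output of the image encoder

best_label, scores = zero_shot_classify(image_embedding, label_embeddings)
print(best_label)
```

Because the candidate labels are supplied as text at inference time, new categories can be added by writing a new prompt rather than retraining the model.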

2. Perception Language Model (PLM): Bridging the Gap Between Vision and Language

PLM builds on the Perception Encoder, using an alignment mechanism that lets the model understand images and videos in their proper context. Developers can use the open-source release of PLM to tackle difficult vision-language tasks without relying on proprietary data.

3. Meta Locate 3D: Advanced Spatial Perception

Meta Locate 3D improves an AI system's ability to determine the precise position and orientation of objects in three-dimensional space. This spatial understanding is crucial for robotics and augmented reality applications.

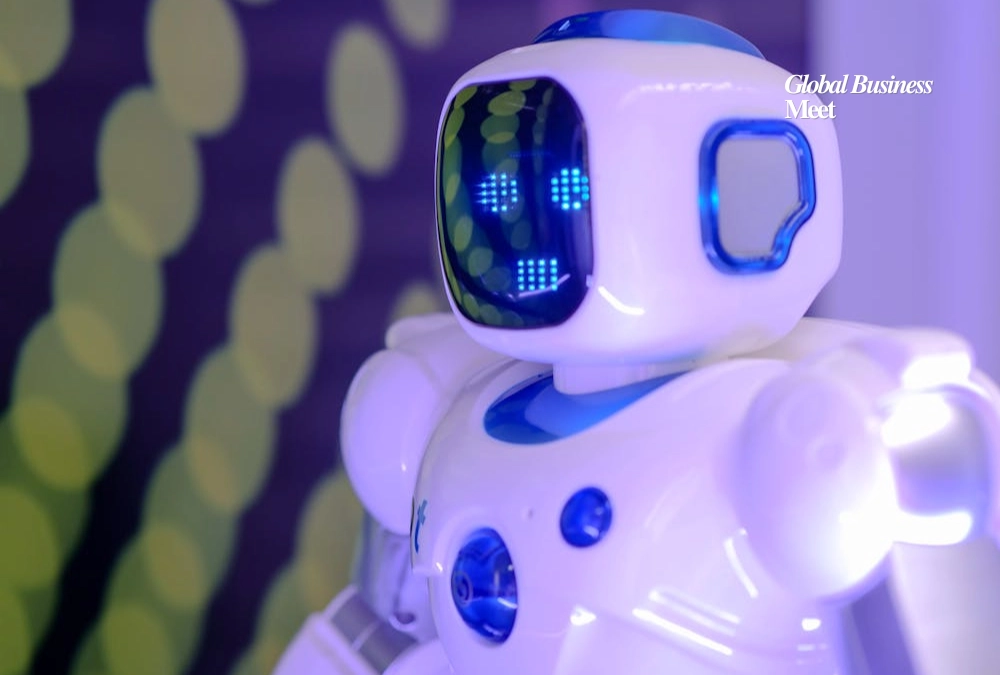

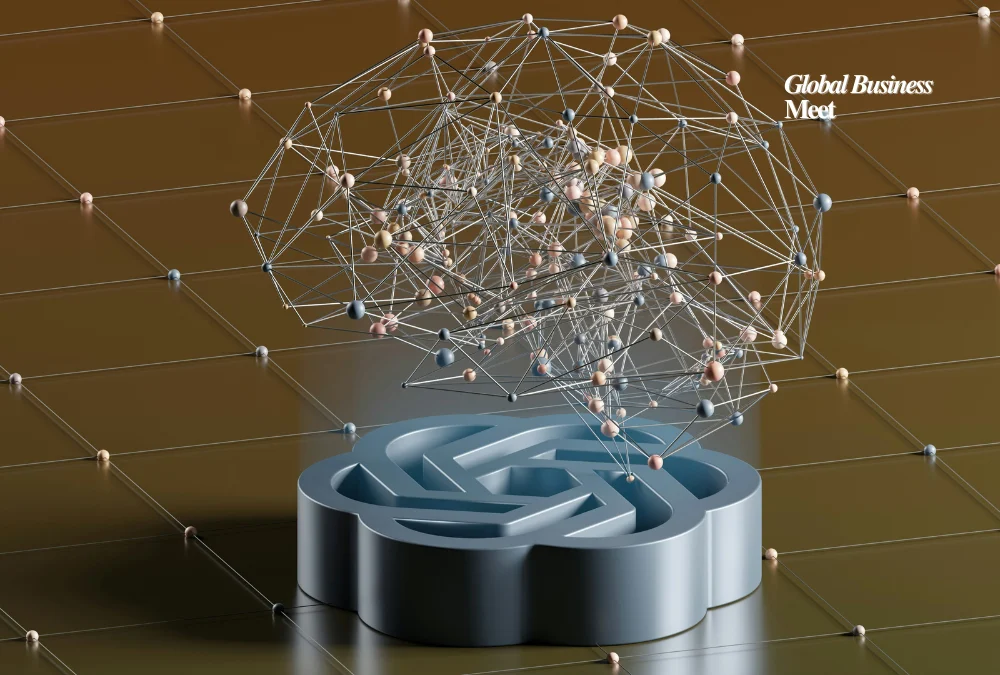

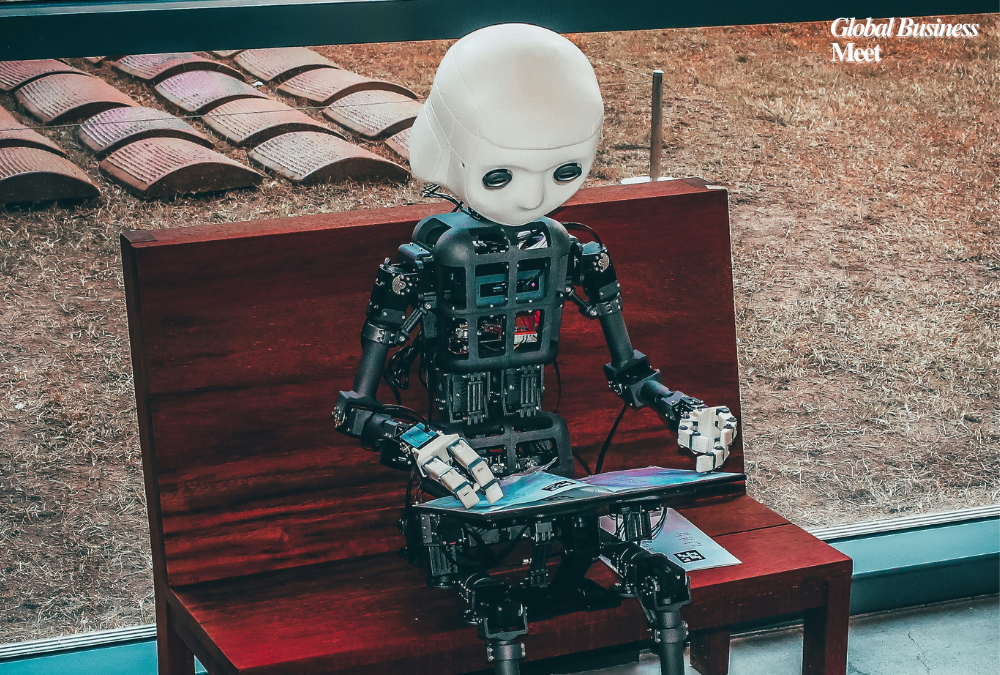

4. Meta Agent: Enabling Interactive Artificial Intelligence

The goal of the Meta Agent project is to create AI agents that can interact constructively with humans. Building AI with this kind of human-like behavior means teaching it to understand context, interpret text, and collaborate with people toward a shared goal.

5. Meta Motivo: Behavioral Foundation Model

Meta Motivo uses an unlabeled human-motion dataset to learn behavioral models that drive complex motion sequences in virtual characters. The resulting motions look authentic because the model can handle new environments and unexpected situations directly at inference time.

Across these five releases, Meta FAIR demonstrates its approach to human-like AI, covering perception, language, spatial reasoning, and behavior. The overall aim is to develop AI systems capable of operating in many different environments.