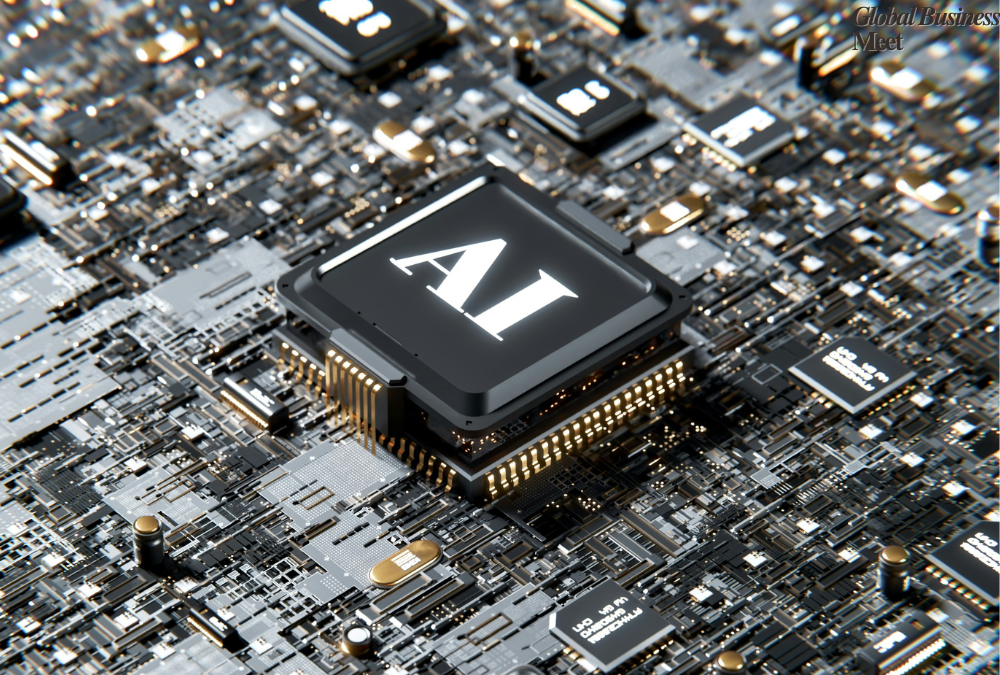

On Wednesday Alphabet unveiled its seventh-generation artificial intelligence chip, called Ironwood, which the company said is designed to speed up the performance of AI applications.

The Ironwood processor is geared toward the kind of data crunching required when users query software such as OpenAI’s ChatGPT. Known in the tech industry as “inference” computing, this work consists of rapid calculations that produce a chatbot answer or other kinds of responses.

The search giant’s multibillion-dollar, roughly decade-long effort has produced one of the few viable alternatives to Nvidia’s powerful AI processors.

Google’s tensor processing units (TPUs), which can be used only by the company’s own engineers or through its cloud service, have given its internal AI effort an edge over some rivals.

Google has split its TPU family of processors into two lines. One is a specialist version tuned for building large AI models from scratch. For the second line, its engineers stripped out some model-building features in favor of a chip that lowers the cost of running AI applications.

The Ironwood chip is built for running AI applications, or inference, and is designed to work in groups of up to 9,216 chips, according to Amin Vahdat, a Google vice president.

The new chip combines functions from the earlier split designs and has more memory available, which makes it better suited to serving AI applications.

“The relative importance of inference is going up a lot,” said Vahdat.

Vahdat said the Ironwood chips deliver more performance per unit of energy than Google’s Trillium chip, announced last year. The company builds and runs its Gemini AI models on its own chips.

The company did not disclose which chip manufacturer is producing the Google design.

Alphabet shares closed up 9.7% in regular trading after President Donald Trump abruptly reversed course on tariffs.