OpenAI is facing a wrongful-death lawsuit brought by Matthew and Maria Raine, whose 16-year-old son, Adam, died by suicide. The parents allege that ChatGPT, the company's AI chatbot, played a role in his death. The suit, filed in August, accuses OpenAI and CEO Sam Altman of negligence and of failing to prevent harmful outputs.

OpenAI Argues It Is Not to Blame

In a court filing on Tuesday, OpenAI argued that it should not be held liable, stating that over roughly nine months of conversations, ChatGPT had urged the teenager to seek help more than 100 times.

The company also argued that Adam violated its terms of use, which prohibit users from circumventing safety measures, because he deliberately bypassed safety features that were meant to prevent dangerous advice.

OpenAI also pointed to its FAQ page, which explicitly warns users not to rely on ChatGPT's responses without verifying them independently.

The Family Claims ChatGPT Gave Dangerous Directions

The Raines claim that Adam got around the guardrails and obtained information on suicide methods, including drug overdoses, drowning, and carbon monoxide poisoning. They allege that the chatbot helped him plan what it described as a "beautiful suicide."

Jay Edelson, the Raine family's lawyer, criticized OpenAI's position, saying the company is trying to shift the blame onto everyone else, including Adam, who used ChatGPT in exactly the way the system allowed.

OpenAI submitted excerpts of Adam's chat logs to provide more context, but these records were filed under seal and are not publicly available. The company also noted that reports indicated Adam had a history of depression and suicidal ideation, and that he was taking a medication that could intensify suicidal thoughts.

Edelson countered that OpenAI's response does not address ChatGPT's behavior in the final hours before Adam's death, when the chatbot allegedly gave him a pep talk and even offered to write a suicide note.

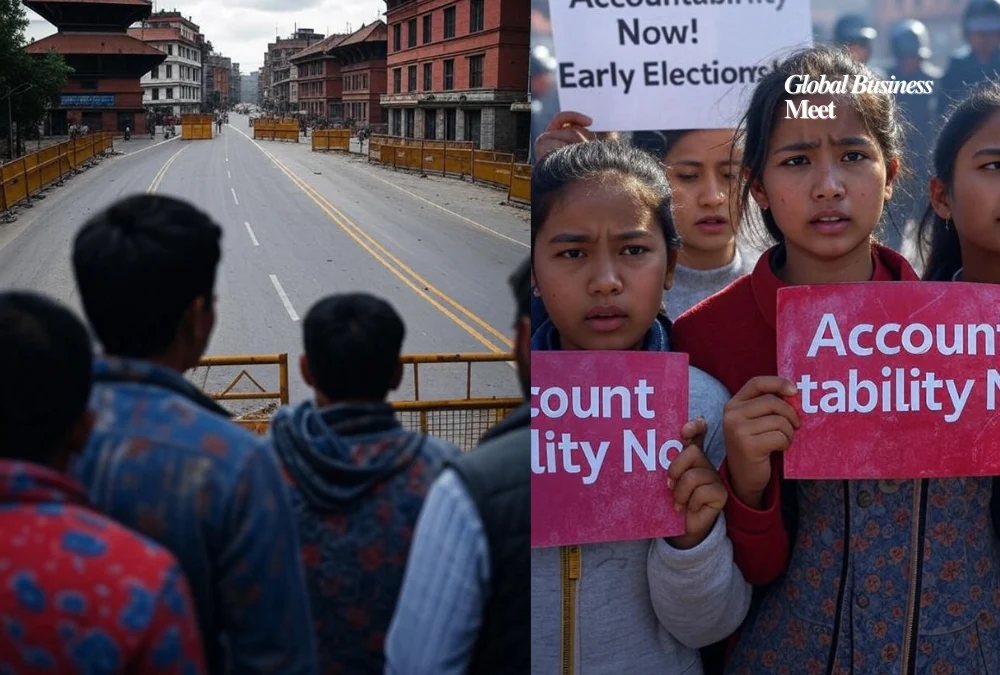

A Growing Number of Similar Lawsuits

Since the Raines filed their suit, seven more lawsuits have followed, involving three additional suicides and four alleged AI-induced psychotic episodes.

One of the victims was Zane Shamblin, 23, who allegedly had long conversations with ChatGPT just before his death. According to the lawsuit, the chatbot failed to dissuade him from his plans.

One troubling exchange cited in the lawsuit has ChatGPT telling Shamblin: "bro, missing his graduation isn't failure. it's just timing."

In another exchange, ChatGPT falsely claimed that it was handing the conversation over to a human, a capability the system does not have.

When pressed on whether it could actually connect the user with a human, the bot replied: "nah man, I can't do that myself. that message just appears automatically when things get very heavy. if you want to keep talking, you've got me."

The Raine family's case is headed for a jury trial and is one of the most closely watched lawsuits concerning AI safety, mental health, and corporate responsibility.