Artificial intelligence is everywhere these days, powering everything from chatbots to enterprise tools. But one frustrating issue continues to bother users and researchers alike: AI models do not always give the same answer to the same question. This inconsistency, often referred to as non-determinism, has been treated as a natural limitation of the technology. Mira Murati’s new company, Thinking Machines Lab, believes it does not have to be that way.

Backed by two billion dollars in seed funding and a team of top researchers, many of whom previously worked at OpenAI, the lab has set out to make AI more predictable and dependable. In its first research blog post, the company shared an early look at how it plans to tackle this challenge.

Why AI Responses Vary

The blog post, written by researcher Horace He, is titled “Defeating Nondeterminism in LLM Inference.” It sets out the technical reasons why models like ChatGPT often provide slightly different answers to the same prompt.

According to He, the issue comes from how GPU kernels, the small programs that run on Nvidia chips, interact during inference. Inference is the process that begins after a user presses enter and the model starts generating a response. Because these kernels do not always run in exactly the same sequence, they can introduce randomness into the system.

He argues that carefully controlling how these kernels are orchestrated could make AI models more deterministic. In other words, if you asked the same question multiple times, you would always get the same answer.
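To make the idea concrete, here is a minimal Python sketch of one well-known reason why execution order can matter: floating-point addition is not associative, so adding the same numbers in a different sequence can give a different result. This is an illustration only, not code from the blog post; the helper `sum_in_order` and the sample values are hypothetical, and real GPU kernels are far more involved.

```python
# Illustration only: the same numbers, added in a different order,
# can produce a different floating-point result.

def sum_in_order(values, order):
    """Add values[i] one at a time, following the given index order."""
    total = 0.0
    for i in order:
        total += values[i]
    return total

# Regrouping three ordinary decimals changes the last digit.
left = (0.1 + 0.2) + 0.3
right = 0.1 + (0.2 + 0.3)
print(left == right)  # False

# Mixing very large and very small numbers makes the gap obvious.
values = [1e16, 1.0, -1e16, 1.0]
order_a = [0, 1, 2, 3]  # the first 1.0 is lost when added to 1e16
order_b = [0, 2, 1, 3]  # the large values cancel first, both 1.0s survive

print(sum_in_order(values, order_a))  # 1.0
print(sum_in_order(values, order_b))  # 2.0

# Fixing the order makes the result repeatable from run to run.
print(sum_in_order(values, order_a) == sum_in_order(values, order_a))  # True
```

In this toy example, pinning down the order of the additions is what makes the answer repeatable, which is the same intuition behind making the kernel orchestration consistent.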

Why Consistency Matters

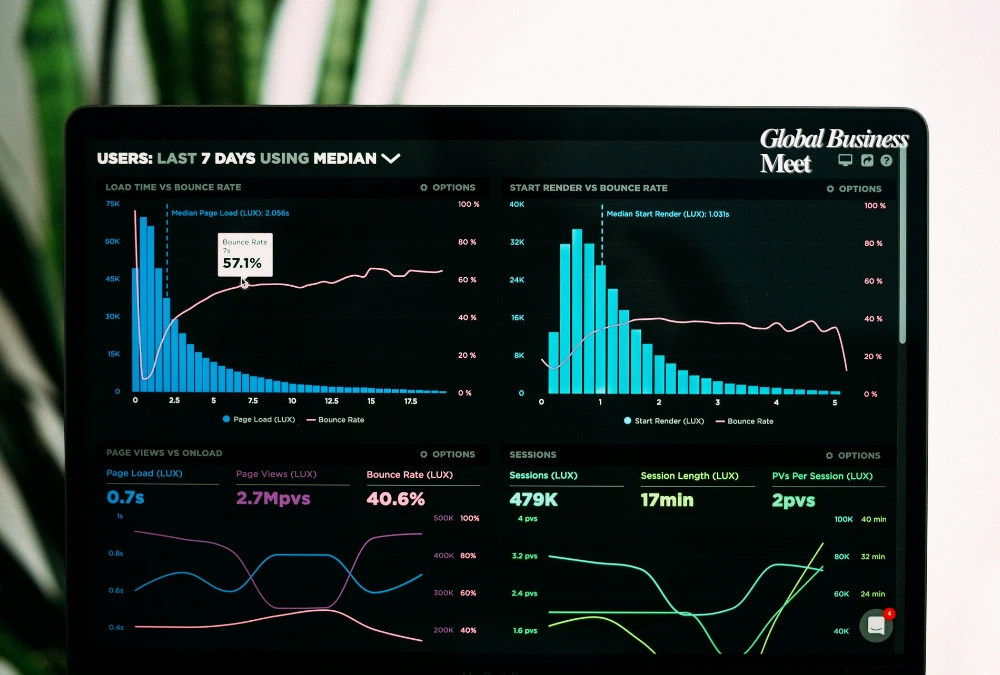

At first, the idea might sound like a minor improvement, but the implications are much larger. Consistency is especially important for businesses, researchers, and scientists who rely on accurate and repeatable results.

Another area where it could make a major difference is reinforcement learning, a method used to train AI by rewarding correct answers. If the model produces slightly different outputs each time, the training process becomes noisy and less effective. With reproducible outputs, reinforcement learning could become more efficient and reliable.

Thinking Machines Lab has already told investors that it plans to use reinforcement learning to customize AI models for business clients. Making AI responses consistent would help the company deliver on that promise.

Mira Murati’s Ambitious Vision

Mira Murati, OpenAI’s former Chief Technology Officer, launched Thinking Machines Lab earlier this year. She has described the company’s mission as building tools that are useful for researchers and startups developing custom models.

The company, already valued at twelve billion dollars, is preparing to release its first product in the coming months. While Murati has not revealed many details, the recent blog post suggests that reproducibility could be a key part of what the lab is working on. For enterprises in finance, healthcare, or scientific research, where precision is critical, this could be a breakthrough.

A Commitment to Open Research

Beyond product development, Thinking Machines Lab has pledged to share more of its work openly with the public. The new blog series, called “Connectionism,” is part of that promise. The company plans to regularly publish research insights, code, and technical discussions in order to foster collaboration and improve its own research culture.

This move is notable in an industry that has grown more secretive over time. OpenAI itself began with a strong emphasis on open research but has become more closed as it scaled. Murati seems determined to take a different approach, at least in the early stages of Thinking Machines Lab.

The Road Ahead

While this first blog post does not reveal exactly what products the lab is building, it does provide a rare glimpse into the thinking of one of Silicon Valley’s most secretive AI startups. Tackling the problem of non-determinism may seem highly technical, but solving it could make AI more trustworthy, more useful, and easier to apply across industries.

The bigger challenge will be turning these research breakthroughs into real products that justify the company’s massive valuation. Investors, businesses, and the wider AI community are watching closely to see if Murati and her team can deliver.

For now, what is clear is that Thinking Machines Lab is not just chasing bigger AI models, but trying to make them better and more consistent. If successful, the company could help shape a future where AI answers are not only intelligent, but also reliable enough to trust every time.